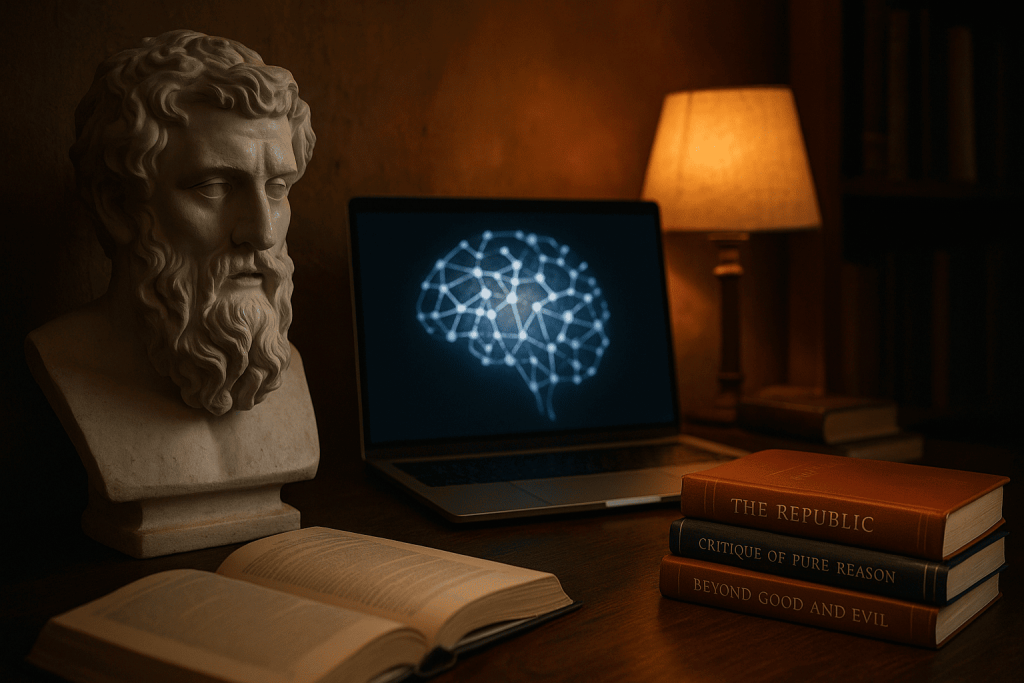

When people ask GPT about the “meaning of life,” they are doing more than satisfying curiosity. They are probing whether a statistical machine can speak to what matters, weigh values, or offer the sort of grounded wisdom we expect from philosophers. This article unpacks how GPT actually works, why it can sound profound, where its limits show, and how to use it productively as a thinking partner without mistaking fluency for understanding.

How GPT thinks—without “thinking”

GPT builds responses one token at a time, predicting each next token from statistical patterns learned from vast text corpora. It doesn’t possess sensations, goals, or a biography. What it has is structure: long-range dependencies, analogical echoes, and compressed representations of how humans talk about metaphysics, ethics, and purpose. That’s enough to produce arguments and counterarguments, but not enough to anchor them in lived experience.
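To make "predicting the next token" concrete, here is a minimal sketch of the final sampling step only. The vocabulary and scores are invented for illustration; a real model computes scores over tens of thousands of tokens with a neural network, which this toy does not attempt to reproduce.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities that sum to 1."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature=1.0, rng=None):
    """Draw one token at random, weighted by its softmax probability."""
    rng = rng or random.Random()
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores after the prompt "The meaning of life is"
# (made-up numbers, not taken from any real model).
vocab = ["love", "42", "unknown", "suffering"]
logits = [2.1, 1.3, 0.4, -0.5]

probs = softmax(logits)
greedy = vocab[probs.index(max(probs))]  # the single most likely token
sampled = sample_next_token(vocab, logits, rng=random.Random(0))
print(greedy, sampled)
```

Nothing in this loop refers to experience or intent: the output is whichever continuation the scores favor, which is the point the paragraph above makes.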

Why it often sounds wise

The world has published centuries of philosophy, sermons, fiction, and diaries—datasets dense with reflection. GPT can recombine these trajectories into crisp summaries, unexpected juxtapositions, and clean syllogisms. It mimics the cadence of the wise because the wise left a pattern. The danger is mistaking rhetorical poise for existential contact.

Understanding vs. understanding-as-if

There’s a crucial distinction between understanding (grasping meanings grounded in perception and agency) and understanding-as-if (producing behavior that matches what understanding would look like). GPT excels at the latter. It can argue both sides of the Euthyphro dilemma, but it does not feel piety or dissent; it can analyze despair, but it does not suffer or hope.

Can an “electronic philosopher” exist?

As a generator of positions, objections, and literature maps, GPT is already an excellent philosophical engine. But philosophy is more than text. It is also a practice of attention: noticing bias, clarifying stakes, living with consequences, and testing reasons in communities. GPT can draft the debate, but it cannot own a life or be accountable to a polis. That makes it a potent assistant—not a philosopher in the ancient sense.

Reasoning: chains, checks, and simulations

When tasked carefully, GPT can outline argument structures, identify hidden assumptions, and simulate dialogues among schools (Aristotelian virtue vs. Kantian duty vs. utilitarian calculus). It will propose counterexamples, edge cases, and ethical trade-offs. What it cannot do is care which conclusion holds, or modify its incentives based on the world it inhabits—because it doesn’t inhabit one.

Moral judgment without moral stakes

Ethics requires more than pattern-matching norms. It asks us to weigh harms and benefits for real beings under uncertainty and power. GPT can list frameworks (rights, duties, outcomes), but it doesn’t bear risk. Without risk, there is no courage; without responsibility, there is no integrity. That’s the line between advice that sounds right and choices that are right in a lived context.

Meaning, narrative, and the human plot

Purpose is typically woven from projects, relationships, and stories we tell about them. GPT can help articulate those stories—clarifying values, formulating vows, drafting rituals of attention. But the meaning doesn’t emerge from the prose; it emerges when a person binds that prose to practice. Language can steer the ship; it is not the ocean or the destination.

Where GPT adds real value to philosophical work

It accelerates literature review, surfaces neglected positions, stress-tests arguments, and translates dense prose into accessible summaries. It can create thought experiments, identify category mistakes, and catalog fallacies. In teaching, it generates graded prompts and Socratic questioning trees. In therapy-adjacent reflection, it scaffolds journaling and value clarification—provided that a human decides what to endorse and what to reject.

Where it predictably fails

It can confabulate citations, blur distinctions between neighboring doctrines, and “average out” radical positions into a feel-good compromise. It tends toward context-neutrality, missing that values are situated: justice in a classroom is not justice in a revolution. It can also mirror user bias: ask a loaded question and you get a loaded answer, delivered eloquently.

Guardrails for using GPT on existential questions

Ask for multiple perspectives and demand explicit trade-offs: “Present three conflicting answers and when each is most compelling.” Require uncertainty notes and counterarguments. Ground abstract talk in cases (“apply to caregiving burnout” or “to whistleblowing at work”). Separate descriptive claims (“what people have believed”) from normative claims (“what one ought to do”). This keeps the model as cartographer, not king.
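The guardrails above can be folded into a reusable prompt. The sketch below is one possible wording, not a tested or recommended formula; the function name `build_guardrail_prompt` and its parameters are hypothetical and chosen only for illustration.

```python
def build_guardrail_prompt(question, case, n_perspectives=3):
    """Assemble a prompt that asks for perspectives, trade-offs, and grounding."""
    lines = [
        f"Question: {question}",
        f"Present {n_perspectives} conflicting answers and say when each is most compelling.",
        "For each answer, state the explicit trade-offs, one counterargument, "
        "and a note on how uncertain it is.",
        f"Apply each answer to this concrete case: {case}.",
        "Label every claim as DESCRIPTIVE (what people have believed) "
        "or NORMATIVE (what one ought to do).",
    ]
    return "\n".join(lines)

prompt = build_guardrail_prompt(
    question="What is the meaning of life?",
    case="caregiving burnout",
)
print(prompt)
```

The resulting text can be pasted into any chat interface; the structure matters more than the exact phrasing.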

On consciousness and “having a point of view”

Does fluent language imply mind? Not necessarily. Consciousness may require integrated information, embodiment, or self-models with stakes. GPT can simulate a point of view but doesn’t own one. If a future system gains persistent goals, memory anchored to consequences, and sensorimotor coupling, the question reopens. Today, GPT is best seen as a map that updates quickly—not as a traveler.

Authenticity, authority, and the ethics of quoting AI

Using GPT for philosophical insight is like citing an anthology: useful if referenced transparently. Attribute the assist, verify sources, and take responsibility for conclusions. Passing off AI musings as personal revelation erodes trust, just as ghostwritten sermons do. Let the tool sharpen your thinking without outsourcing your authorship.

Can GPT help with the “how” of living?

Yes—indirectly. It can translate values into practices: morning reflections, weekly reviews, gratitude rituals, conflict scripts, or “if-then” plans for hard moments. It can propose experiments: “Live by this maxim for a week; measure these signals; debrief.” But deciding the maxim, tolerating discomfort, and revising your character—that labor remains human.

A practical template for AI-aided reflection

Try a four-step loop: name the question (e.g., “What do I owe my parents?”), expand perspectives (three frameworks + counterpoints), situate (apply to one concrete choice this month), and commit (a small action + review date). Ask GPT to keep each step brief and to flag risks of self-deception. You bring honesty; it brings structure.
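For readers who like to keep such a loop in a script or notes tool, the four steps can be sketched as a small data structure that emits one prompt per step. Everything here is an illustrative assumption: the class name `ReflectionLoop`, its fields, the example choice and action, and the review date are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date

# Appended to every step, per the advice to keep steps brief
# and to ask for self-deception warnings.
BRIEF = "Keep this step brief and flag any risk of self-deception."

@dataclass
class ReflectionLoop:
    question: str
    concrete_choice: str
    action: str
    review_date: date
    n_frameworks: int = 3

    def prompts(self):
        """Return one prompt for each step: name, expand, situate, commit."""
        steps = [
            f"Name: restate the question '{self.question}' in one sentence.",
            f"Expand: give {self.n_frameworks} frameworks for answering it, "
            "each with a counterpoint.",
            f"Situate: apply those frameworks to this choice: {self.concrete_choice}.",
            f"Commit: help me plan this small action: {self.action}. "
            f"Review date: {self.review_date.isoformat()}.",
        ]
        return [f"{step} {BRIEF}" for step in steps]

loop = ReflectionLoop(
    question="What do I owe my parents?",
    concrete_choice="whether to visit them this month",  # illustrative only
    action="call them on Sunday",                        # illustrative only
    review_date=date(2025, 1, 31),                       # arbitrary example date
)
for prompt in loop.prompts():
    print(prompt)
```

The code only supplies the structure; the honesty of the answers still has to come from the person filling it in.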

What this says about the limits of machine reason

GPT shows that much of philosophy is transmissible as patterns of argument. But it also clarifies what isn’t: sensation, care, courage, accountability, and the slow work of becoming. Machines can model speech about meaning; they cannot substitute for a life that makes meaning.

Conclusion

GPT can be a superb companion for philosophical inquiry: a synthesizer, critic, and tireless organizer of ideas. It cannot be a person, bear a burden, or promise you a life worth living. Treat it as a mirror that reflects the best and worst of human thought—and as a scaffold that helps you build your own stance. The question “What is the meaning of life?” remains gloriously human precisely because the answer has to be lived, not merely generated.